From Code to Monitoring: My FastAPI Calculator DevOps Pipeline (Complete Beginner Guide)

In this complete beginner guide, I walk through my FastAPI calculator project end to end: coding, testing, CI with Jenkins and GitHub Actions, and monitoring with Prometheus and Grafana.

In this project, I demonstrate an end-to-end DevOps workflow using a FastAPI calculator, from clean application design and automated CI testing to production-style monitoring with Prometheus and Grafana.

Repository: fastapi-calculator-devops-pipeline

In this post, I explain every part of the repository in beginner-friendly steps, including:

- How I structured the FastAPI app.

- How I tested it with both unit tests and browser tests.

- How I automated CI with Jenkins and GitHub Actions.

- How I added runtime monitoring with Prometheus and Grafana.

Why I Chose a Calculator for DevOps Practice

I intentionally picked a calculator app because:

- The logic is simple and easy to verify.

- The UI is small, so Selenium tests stay manageable.

- I can focus on pipeline and monitoring concepts instead of domain complexity.

- It still covers the full workflow from code to observability.

This project maps directly to:

PLAN → CODE → BUILD → TEST → INTEGRATE → MONITOR

Complete Project Structure

fastapi-calculator-devops-pipeline/
├── src/
│   ├── calculator.py
│   └── app.py
├── templates/
│   └── index.html
├── tests/
│   ├── test_calculator.py
│   └── test_selenium.py
├── monitoring/
│   ├── prometheus.yml
│   └── grafana/
│       └── provisioning/
│           ├── datasources/
│           │   └── prometheus.yml
│           └── dashboards/
│               ├── dashboard.yml
│               └── calculator.json
├── .github/workflows/ci.yml
├── Dockerfile
├── docker-compose.yml
├── Jenkinsfile
├── Makefile
├── requirements.txt
└── README.md

Prerequisites

Before running everything, I make sure I have:

- Python 3.9+ installed.

- pip working.

- Docker Desktop running (for the monitoring stack).

- Google Chrome + matching chromedriver (for local Selenium UI tests).

Optional but useful:

- Jenkins (if I want local CI).

- Homebrew (on macOS) for quick installs.

How I Map Tools to Each DevOps Phase

| Phase | Tooling I use in this repository | What I do in practice |
|---|---|---|
| Plan | README + scope definition | I keep the app intentionally simple and focus on the workflow |
| Code | Git + FastAPI source | I implement calculator logic and web routes |
| Build | Makefile + pip | I install dependencies and standardize commands |
| Test | pytest + Selenium | I validate logic first, then verify UI behavior |
| Integrate | Jenkins + GitHub Actions | I automate checks on code changes |
| Monitor | Prometheus + Grafana | I observe request rate, latency, and error behavior live |

Step 1: Clone and Install Dependencies

git clone https://github.com/earthinversion/fastapi-calculator-devops-pipeline.git

cd fastapi-calculator-devops-pipeline

python3 -m venv .venv

source .venv/bin/activate

pip install -r requirements.txt

I can also use my Makefile shortcut:

make install

Dependencies in this project:

- fastapi for the web framework.

- uvicorn[standard] as the ASGI server.

- python-multipart for parsing HTML form inputs.

- jinja2 for rendering templates.

- prometheus-fastapi-instrumentator for /metrics.

- pytest for unit tests and test orchestration.

- selenium for browser-based UI testing.

Git Workflow I Follow

I keep CI centered around Git events, because git push is what starts automation.

git init

git add .

git commit -m "Initial commit"

For day-to-day updates, I follow:

# edit code

git add src/calculator.py

git commit -m "Add modulo operation"

git push origin main

Jenkins picks up this push by polling the repository (or through a webhook), while GitHub Actions reacts to the push event natively.

Step 2: Understand the Application Code

src/calculator.py (Core Logic)

This file keeps pure math logic:

- add(a, b)

- subtract(a, b)

- multiply(a, b)

- divide(a, b), which raises ValueError("Cannot divide by zero") for a zero divisor

I separated this logic from the web layer so I can test it quickly without starting a server.
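Based only on the four functions and the error message listed above, a minimal sketch of src/calculator.py could look like this (the type hints and docstrings are my own additions, so the repository's file may differ):

```python
"""Pure calculator logic with no web or I/O dependencies (sketch)."""


def add(a: float, b: float) -> float:
    """Return the sum of a and b."""
    return a + b


def subtract(a: float, b: float) -> float:
    """Return a minus b."""
    return a - b


def multiply(a: float, b: float) -> float:
    """Return the product of a and b."""
    return a * b


def divide(a: float, b: float) -> float:
    """Return a divided by b, rejecting a zero divisor explicitly."""
    if b == 0:
        # Raise a domain-level error instead of letting ZeroDivisionError leak,
        # so the web layer can show a friendly message.
        raise ValueError("Cannot divide by zero")
    return a / b
```

Because nothing here touches FastAPI, a unit test can call divide(10, 0) inside pytest.raises(ValueError) and get feedback in milliseconds.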

src/app.py (FastAPI Web Layer)

This file does four main jobs:

- Creates app = FastAPI(title="CI/CD Calculator").

- Configures Jinja templates.

- Exposes GET / and POST / routes.

- Enables Prometheus metrics automatically with:

Instrumentator().instrument(app).expose(app)

That one line gives me /metrics for observability.
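The POST / route has to turn the dropdown value into a calculator call and turn a ValueError into the text for the error block. Here is a framework-free sketch of that step; the option values (add, subtract, multiply, divide), the helper name compute and the whole-number formatting are hypothetical choices of mine, and operator plus a stand-in divide replace the real calculator.py imports so the block runs on its own:

```python
import operator
from typing import Callable, Dict, Optional, Tuple


def _divide(a: float, b: float) -> float:
    # Stand-in for calculator.divide, with the same zero-divisor behavior.
    if b == 0:
        raise ValueError("Cannot divide by zero")
    return a / b


# Hypothetical mapping from the dropdown's option values to functions;
# in app.py these would be the functions imported from calculator.py.
OPERATIONS: Dict[str, Callable[[float, float], float]] = {
    "add": operator.add,
    "subtract": operator.sub,
    "multiply": operator.mul,
    "divide": _divide,
}


def compute(a: float, b: float, operation: str) -> Tuple[Optional[str], Optional[str]]:
    """Return (result_text, error_text); exactly one of the two is None."""
    func = OPERATIONS.get(operation)
    if func is None:
        return None, f"Unknown operation: {operation}"
    try:
        value = float(func(a, b))
    except ValueError as exc:
        # Domain errors from the calculator become the #error message.
        return None, str(exc)
    # Show whole numbers without a trailing ".0" (15 instead of 15.0).
    text = str(int(value)) if value.is_integer() else str(value)
    return text, None
```

In app.py, a handler whose parameters are declared with FastAPI's Form(...) could call compute(a, b, operation) and pass both values to the index.html template, which then fills the #result or #error block.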

templates/index.html (UI)

I keep the UI intentionally minimal:

- Input a

- Operation dropdown

- Input b

- Submit button

- Result and error blocks with IDs id="result" and id="error"

Those IDs are important because Selenium tests read these elements directly.

Calculator App Screenshot

Step 3: Run the App Locally

make run

This starts:

- App UI at http://localhost:5000

- FastAPI docs at http://localhost:5000/docs

Equivalent raw command:

cd src && uvicorn app:app --reload --port 5000

Step 4: Testing Strategy (Fast + Slow Layers)

I split tests into two layers so feedback stays fast.

Layer A: Unit Tests (tests/test_calculator.py)

I test the pure functions directly, with no browser and no network.

Coverage in this file:

- Addition: positive, negative, mixed signs, floats.

- Subtraction: basic and negative result.

- Multiplication: basic and by-zero.

- Division: basic, float approximation, divide-by-zero exception.

Run:

make test-unit

Layer B: UI Tests (tests/test_selenium.py)

I run true end-to-end browser checks:

- Page loads and title contains Calculator.

- 10 + 5 gives 15.

- 10 / 0 shows the zero-division error.

How I designed it:

- A pytest fixture starts uvicorn on 127.0.0.1:5001 in a background thread.

- Another fixture starts headless Chrome.

- Tests fill in the form fields and assert on the visible text of #result and #error.
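Starting uvicorn in a background thread returns before the server is actually listening, so a fixture like this usually waits for the port before handing control to Selenium. The repository may handle this differently (for example with a fixed sleep); here is a standard-library sketch of a readiness check, where wait_for_port is a hypothetical helper name:

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 10.0) -> None:
    """Block until a TCP connection to (host, port) succeeds, or raise TimeoutError."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect means the server socket is accepting connections.
            with socket.create_connection((host, port), timeout=0.5):
                return
        except OSError:
            if time.monotonic() >= deadline:
                raise TimeoutError(f"{host}:{port} did not open within {timeout}s")
            time.sleep(0.1)  # brief pause before retrying
```

In the fixture, I would start the server thread first (for example threading.Thread(target=server.run, daemon=True) around a uvicorn.Server instance), call wait_for_port("127.0.0.1", 5001), and only then yield to the tests.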

Run:

make test-ui

Run all tests:

make test

Important behavior: Selenium tests are guarded with pytest.importorskip("selenium"), so they skip gracefully if Selenium is unavailable.

Step 5: Build and Command Automation via Makefile

My Makefile gives one command per workflow task:

- make install

- make run

- make test-unit

- make test-ui

- make test

- make monitor

- make monitor-down

- make clean

I treat this as my Python equivalent of Maven/Gradle-style task entry points.

Step 6: CI Option 1 with Jenkins

I use a declarative Jenkinsfile with these stages:

Code Checkout → Build → Unit Tests → UI Tests → Integration Complete

Jenkins Setup (macOS)

brew install jenkins-lts

brew services start jenkins-lts

Then I open http://localhost:8080 and finish initial setup.

Pipeline Job Setup

- Create a new Pipeline job.

- Choose Pipeline script from SCM.

- Set SCM to Git.

- Paste the repo URL.

- Set the script path to Jenkinsfile.

- Build and inspect the stage view.

Important Jenkins Note from This Repo

In the UI Tests stage, I currently use:

pytest tests/test_selenium.py -v --tb=short || true

That means UI test failures do not fail the whole pipeline. I kept this behavior to prevent local Jenkins environments (often missing browser tooling) from blocking all CI runs. For stricter CI, I can remove || true.
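The reason || true has this effect is ordinary shell behavior: Jenkins' sh step fails the stage when the script exits with a non-zero status, and cmd || true always exits with 0. A tiny Python sketch of the difference, using false as a stand-in for a failing pytest run (it assumes a POSIX /bin/sh, as on macOS or Linux agents):

```python
import subprocess


def shell_exit_code(command: str) -> int:
    """Run a command through /bin/sh and return its exit status."""
    return subprocess.run(command, shell=True).returncode


strict = shell_exit_code("false")            # like a failing pytest run
lenient = shell_exit_code("false || true")   # like the UI Tests stage
print(f"without || true: {strict}, with || true: {lenient}")
# prints: without || true: 1, with || true: 0
```

Removing || true brings back the strict behavior, where a single Selenium failure turns the stage (and the pipeline) red.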

Trigger Jenkins Automatically on Every Push

I can enable auto-trigger in two common ways:

- Poll SCM in Jenkins with a schedule like * * * * * (every minute).

- Configure a GitHub webhook that posts to http://<jenkins-host>/github-webhook/.

Polling is easiest for local learning setups. Webhooks are better for production CI responsiveness.

Step 7: CI Option 2 with GitHub Actions

I also configured .github/workflows/ci.yml, which runs in the cloud on every:

- Push to main

- Pull request targeting main

Workflow jobs:

- build-and-unit-test

- ui-tests (depends on build-and-unit-test)

- integration-complete (depends on both jobs)
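The needs: relationships between these jobs form a small dependency graph, and by default GitHub starts a job only after every job it needs has succeeded. A Python sketch of that ordering with the standard-library graphlib (Python 3.9+); the dictionary simply mirrors the job names above and is not parsed from ci.yml:

```python
from graphlib import TopologicalSorter

# Each job maps to the set of jobs it lists under `needs:`.
JOB_NEEDS = {
    "build-and-unit-test": set(),
    "ui-tests": {"build-and-unit-test"},
    "integration-complete": {"build-and-unit-test", "ui-tests"},
}


def run_order(graph):
    """Return job names in an order where every job follows its dependencies."""
    return list(TopologicalSorter(graph).static_order())


print(run_order(JOB_NEEDS))
# prints: ['build-and-unit-test', 'ui-tests', 'integration-complete']
```

Because each job depends on the previous one, there is exactly one valid order here; jobs without a needs: link between them would be free to run in parallel.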

Key implementation details:

- Uses actions/setup-python@v5 with Python 3.9.

- Enables pip caching for faster repeated runs.

- Uses browser-actions/setup-chrome@v1 to match Chrome and ChromeDriver in CI.

- Runs Selenium UI tests headlessly on ubuntu-latest.

I can view full history in the repository’s Actions tab.

Jenkins vs GitHub Actions in This Project

| Topic | Jenkins | GitHub Actions |
|---|---|---|
| Setup effort | I install and maintain Jenkins myself | I only commit YAML and GitHub runs it |
| Infrastructure | Local machine or managed server | GitHub-hosted runners |
| Push integration | Polling/webhook setup needed | Built-in event trigger |
| Browser setup for UI tests | I manage Chrome/chromedriver on agents | Runner setup is mostly prepackaged |
| Best use case | Custom enterprise CI environments | Repos already hosted on GitHub |

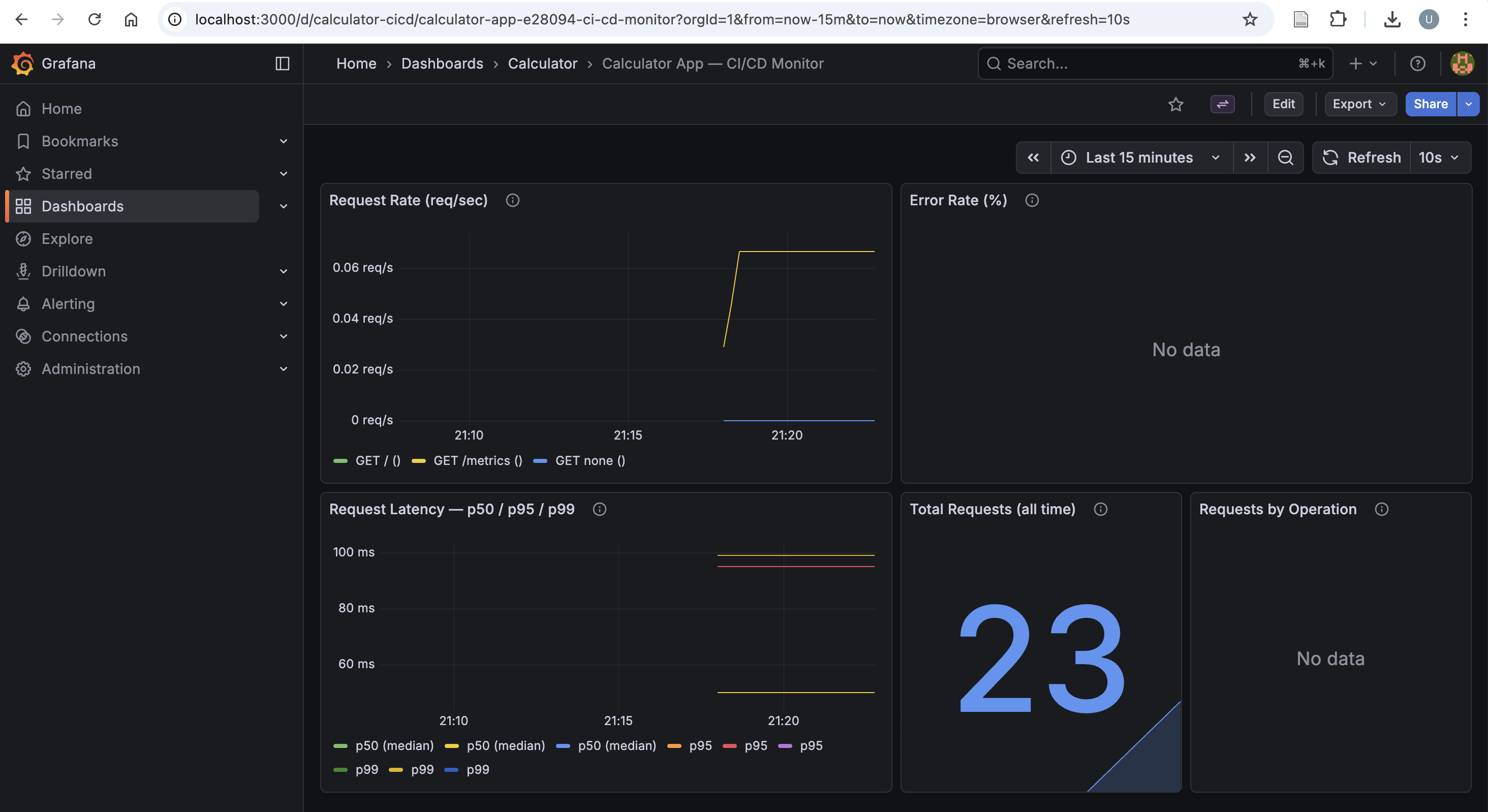

Step 8: Monitoring with Prometheus and Grafana

This is where I move from test-time confidence to runtime visibility.

Monitoring Architecture

FastAPI app (/metrics) → Prometheus scrape every 15s → Grafana dashboards

Docker Compose Services

docker-compose.yml starts:

- app (calculator) on host port 5001, mapped to container port 5000

- prometheus on 9090

- grafana on 3000

I use host port 5001 for the containerized app so that port 5000 stays free for a local make run.

Start Monitoring Stack

make monitor

URLs after startup:

- App: http://localhost:5001

- Metrics: http://localhost:5001/metrics

- Prometheus: http://localhost:9090

- Grafana: http://localhost:3000 (admin/admin)

Stop stack:

make monitor-down

Prometheus Config (monitoring/prometheus.yml)

I scrape:

- Prometheus itself (localhost:9090)

- FastAPI app service (app:5000) at /metrics

Global scrape/evaluation interval is 15s.

Useful PromQL Queries I Use for Learning

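# Per-second request rate, averaged over the last minute

rate(http_requests_total[1m])

# 95th percentile latency across all series (aggregate buckets by le first)

histogram_quantile(0.95, sum by (le) (rate(http_request_duration_seconds_bucket[1m])))

# Error percentage: share of 4xx/5xx responses (sum both sides so the label sets line up)

sum(rate(http_requests_total{status=~"4..|5.."}[1m]))

/ sum(rate(http_requests_total[1m])) * 100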
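histogram_quantile() never sees individual requests: it estimates the quantile from cumulative bucket counts by linear interpolation inside the bucket where the target rank falls. A simplified Python sketch of that estimate (it skips Prometheus details such as bucket-consistency fixes and negative bucket bounds), with made-up bucket counts in the usage example:

```python
import math
from typing import List, Tuple


def histogram_quantile(q: float, buckets: List[Tuple[float, float]]) -> float:
    """Estimate quantile q from cumulative (upper_bound, count) bucket pairs.

    `buckets` must be sorted by upper bound and end with (math.inf, total),
    mirroring the le-labelled *_bucket series that Prometheus stores.
    """
    if not 0 < q <= 1:
        raise ValueError("this sketch only handles 0 < q <= 1")
    if len(buckets) < 2:
        return math.nan  # need at least one finite bucket plus +Inf
    total = buckets[-1][1]
    if total == 0:
        return math.nan  # no observations in the window
    rank = q * total
    # Index of the first bucket whose cumulative count reaches the target rank.
    idx = next(i for i, (_, count) in enumerate(buckets) if count >= rank)
    if idx == len(buckets) - 1:
        # Rank lands in the +Inf bucket: return the largest finite bound.
        return buckets[-2][0]
    upper, count = buckets[idx]
    lower, below = (0.0, 0.0) if idx == 0 else buckets[idx - 1]
    # Assume observations are spread evenly inside the bucket.
    return lower + (upper - lower) * (rank - below) / (count - below)
```

For example, with 100 requests spread over 0.1, 0.5 and 1 second boundaries (which I believe are the instrumentator's default latency buckets) as [(0.1, 50), (0.5, 90), (1.0, 98), (math.inf, 100)], histogram_quantile(0.95, ...) returns 0.8125: the 95th request falls in the 0.5 to 1 s bucket and is placed 5/8 of the way through it. With buckets this coarse, p95 and p99 are rough estimates rather than measured values.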

Grafana Provisioning

I pre-provision everything so no manual dashboard setup is needed:

- Datasource provisioning file points Grafana to http://prometheus:9090.

- Dashboard provider loads JSON from provisioning path.

- Dashboard JSON (calculator.json) includes five panels:
  - Request rate
  - Error rate (%)
  - Latency p50/p95/p99
  - Total requests
  - Requests by operation
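Because the dashboard is just JSON, it can also be generated instead of hand-edited. A sketch that builds a minimal dashboard for a few of these panels using fields from Grafana's dashboard model (title, uid, panels, type, gridPos, targets with expr and refId); the uid, panel sizes and PromQL expressions are my own assumptions, this is not the repository's calculator.json, and I leave out the datasource field on the assumption that the provisioned Prometheus source is Grafana's default:

```python
import json
from typing import Dict, List, Tuple

# Hypothetical (title, PromQL) pairs; not copied from the repository's calculator.json.
PANELS: List[Tuple[str, str]] = [
    ("Request rate", "sum(rate(http_requests_total[1m]))"),
    ("Error rate (%)",
     'sum(rate(http_requests_total{status=~"4..|5.."}[1m]))'
     " / sum(rate(http_requests_total[1m])) * 100"),
    ("Latency p95",
     "histogram_quantile(0.95, sum by (le) (rate(http_request_duration_seconds_bucket[1m])))"),
    ("Total requests", "sum(http_requests_total)"),
]


def build_dashboard(title: str, panels: List[Tuple[str, str]]) -> Dict:
    """Return a minimal Grafana dashboard model with one time-series panel per query."""
    panel_models = []
    for index, (panel_title, expr) in enumerate(panels):
        panel_models.append({
            "id": index + 1,
            "type": "timeseries",
            "title": panel_title,
            # Two panels per row: each is 12 of Grafana's 24 grid columns wide.
            "gridPos": {"h": 8, "w": 12, "x": (index % 2) * 12, "y": (index // 2) * 8},
            # No explicit datasource, so the panel falls back to the default one.
            "targets": [{"expr": expr, "refId": "A"}],
        })
    return {"title": title, "uid": "calculator-sketch", "panels": panel_models}


if __name__ == "__main__":
    print(json.dumps(build_dashboard("FastAPI Calculator", PANELS), indent=2))
```

Saved with json.dumps(..., indent=2) into the dashboards provisioning folder, a file like this would be picked up by the same provider that loads calculator.json.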

Grafana Dashboard Screenshot

End-to-End Local Runbook

# install deps

make install

# run app

make run

# run tests

make test-unit

make test

# run monitoring stack in docker

make monitor

# stop monitoring stack

make monitor-down

# clean caches

make clean

Tool Summary

| Tool | Role in this repository |
|---|---|
| Git | Tracks source changes and triggers CI |
| FastAPI | Serves the calculator UI and routes |
| uvicorn | Runs the FastAPI app as an ASGI server |
| Jinja2 | Renders HTML templates |
| pytest | Runs the unit tests and orchestrates the UI tests |
| Selenium | Automates end-to-end browser checks |
| Jenkins | Local/self-managed CI pipeline |
| GitHub Actions | Cloud CI pipeline |
| Docker + Compose | Packages and runs app + monitoring stack |
| Prometheus | Scrapes and stores metrics time series |
| Grafana | Visualizes metrics in dashboards |

What I Plan to Add Next

My next steps for this repo are:

- Add a deploy stage (for example Ansible-based remote deployment).

- Replace single-host compose setup with Kubernetes manifests.

- Add alerts (Grafana or Prometheus alert rules) for non-zero error rates and latency spikes.

- Tighten Jenkins UI test policy by failing pipeline on Selenium failures in stable environments.

If you want to learn DevOps in a structured way, I suggest cloning this project and running each phase one by one instead of treating it as a black box.

Disclaimer of liability

The information provided by the Earth Inversion is made available for educational purposes only.

Whilst we endeavor to keep the information up-to-date and correct, Earth Inversion makes no representations or warranties of any kind, express or implied about the completeness, accuracy, reliability, suitability or availability with respect to the website or the information, products, services or related graphics content on the website for any purpose.

UNDER NO CIRCUMSTANCE SHALL WE HAVE ANY LIABILITY TO YOU FOR ANY LOSS OR DAMAGE OF ANY KIND INCURRED AS A RESULT OF THE USE OF THE SITE OR RELIANCE ON ANY INFORMATION PROVIDED ON THE SITE. ANY RELIANCE YOU PLACE ON SUCH MATERIAL IS THEREFORE STRICTLY AT YOUR OWN RISK.